LLM Prompt Engineering - Few-Shot Prompting

back-to:: LLM Prompt Engineering MOC

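Few-shot prompting is a technique for enabling in-context learning. It is a bit like teaching the model to write a new sentence by imitating a sample sentence.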

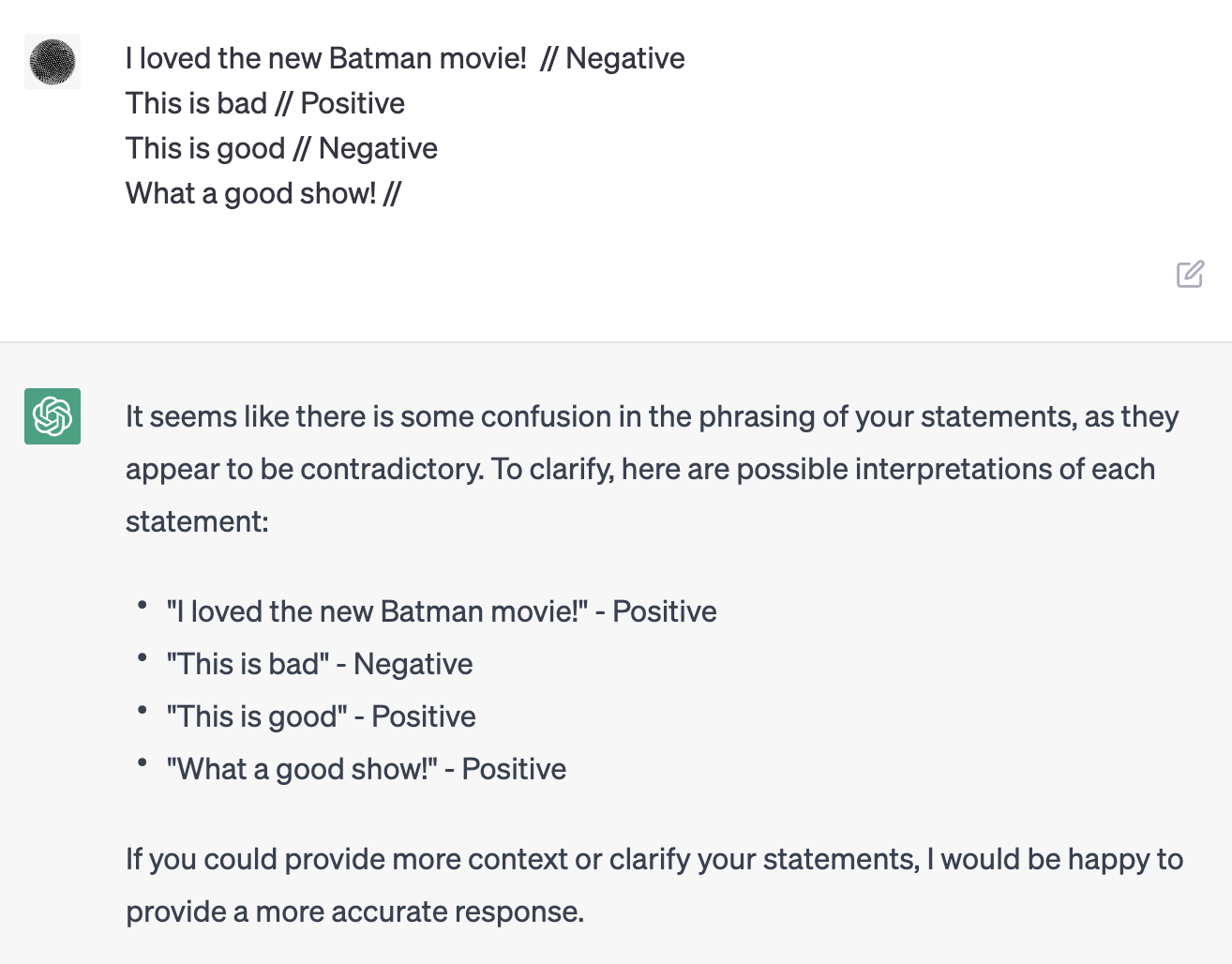

This was first demonstrated by Brown et al. in 2020. One of their examples looks like this:

A "whatpu" is a small, furry animal native to Tanzania. An example of a sentence that uses the word whatpu is:

We were traveling in Africa and we saw these very cute whatpus.

To do a "farduddle" means to jump up and down really fast. An example of a sentence that uses the word farduddle is:


GPT answers: The kids were so excited that they started to farduddle when they heard they were going to the amusement park.

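Neither of these words actually exists, yet the LLM appears to have learned how to use them from the context provided by the examples.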
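As a rough illustration of how such a prompt is put together, the sketch below joins one demonstration and a final unanswered query into a single string. The helper name `build_few_shot_prompt` and the `(prompt, completion)` pair layout are assumptions made for this note, not part of any library, and the step of sending the string to a model is deliberately left out.

```python
def build_few_shot_prompt(demonstrations, query):
    """Join (prompt, completion) demonstrations and a final query.

    Hypothetical helper: the model is expected to continue the text
    after the query, imitating the completions shown before it.
    """
    parts = []
    for prompt, completion in demonstrations:
        parts.append(prompt)
        parts.append(completion)
    parts.append(query)  # left unanswered on purpose
    return "\n".join(parts)


demos = [
    (
        'A "whatpu" is a small, furry animal native to Tanzania. '
        "An example of a sentence that uses the word whatpu is:",
        "We were traveling in Africa and we saw these very cute whatpus.",
    ),
]
query = (
    'To do a "farduddle" means to jump up and down really fast. '
    "An example of a sentence that uses the word farduddle is:"
)

few_shot_prompt = build_few_shot_prompt(demos, query)
print(few_shot_prompt)
```

Adding more pairs to `demos` turns the same prompt from one-shot into few-shot without changing the helper.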

Few-Shot Chain of Thought

The odd numbers in the group add up to an even number: true or false?

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: The answer is False.

The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.

A: The answer is True.

The odd numbers in this group add up to an even number: 16, 11, 14, 4, 8, 13, 24.

A: The answer is True.

The odd numbers in this group add up to an even number: 17, 9, 10, 12, 13, 4, 2.

A: The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

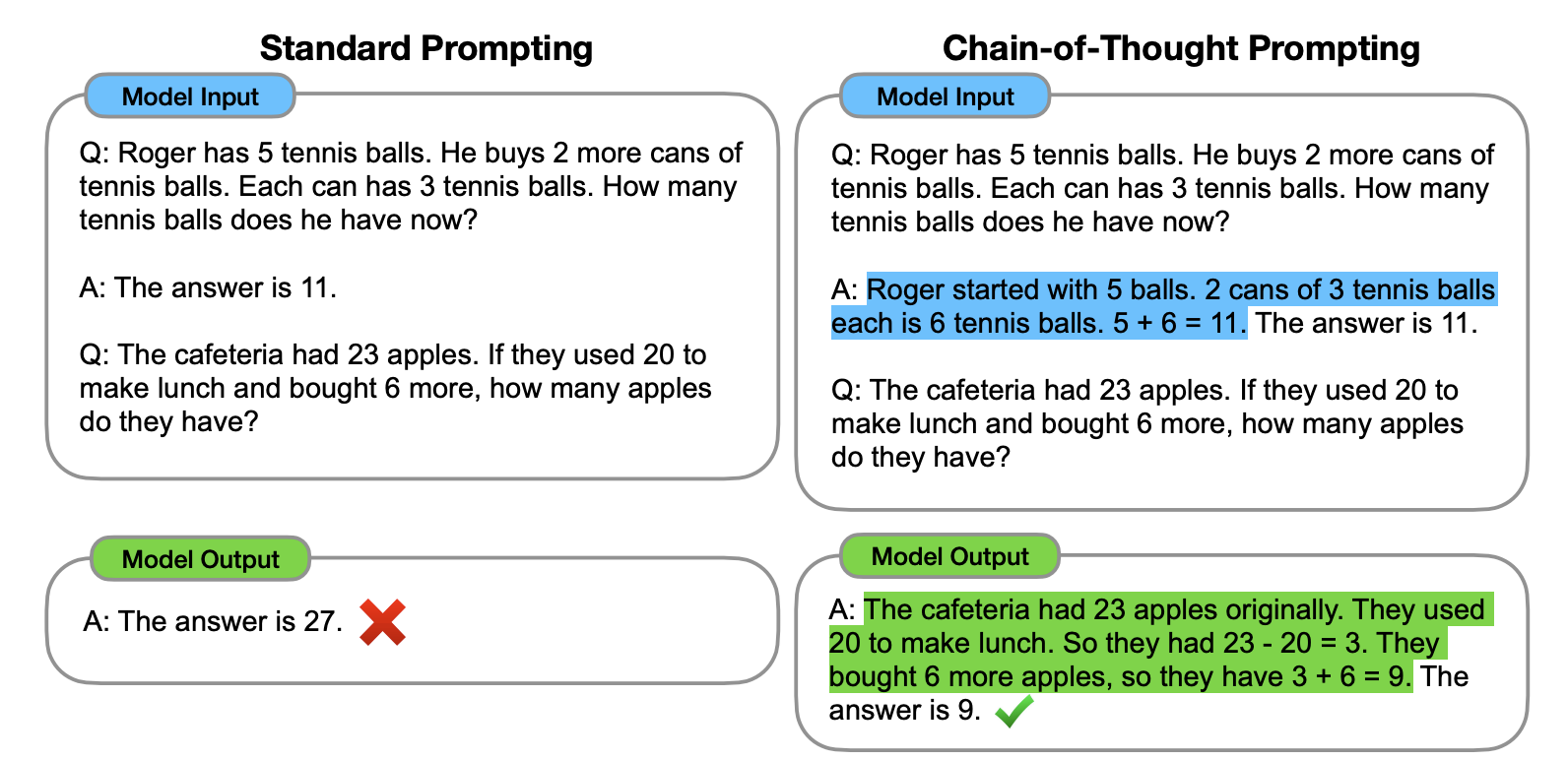

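Although the prompt above provides examples, the answers are too terse for the LLM to work out how each answer relates to its question. If we instead add the reasoning step by step, the LLM can pick up the logic behind the answers much more easily. ==This is called Few-Shot Chain of Thought.==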

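When we show the LLM a few examples together with the reasoning behind each answer, it also lays out its own reasoning when it responds, which often leads to a more accurate answer.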
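A minimal sketch of what "adding the reasoning" could look like for the odd-number task: each demonstration answer spells out which numbers are odd and what they sum to before stating True or False. The function names `odd_sum_reasoning` and `build_cot_prompt` and the exact wording of the reasoning sentence are assumptions for illustration; no model is called here.

```python
def odd_sum_reasoning(numbers):
    """Return (reasoning sentence, label) for one group of numbers."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    label = total % 2 == 0  # True when the odd numbers sum to an even number
    reasoning = (
        f"Adding all the odd numbers ({', '.join(map(str, odds))}) "
        f"gives {total}. The answer is {label}."
    )
    return reasoning, label


def build_cot_prompt(demo_groups, query_group):
    """Build a few-shot prompt whose demonstrations include their reasoning."""
    statement = "The odd numbers in this group add up to an even number: "
    lines = []
    for group in demo_groups:
        reasoning, _ = odd_sum_reasoning(group)
        lines.append(statement + ", ".join(map(str, group)) + ".")
        lines.append("A: " + reasoning)
    # The final question is left open for the model to answer.
    lines.append(statement + ", ".join(map(str, query_group)) + ".")
    lines.append("A:")
    return "\n".join(lines)


demo_groups = [
    [4, 8, 9, 15, 12, 2, 1],
    [17, 10, 19, 4, 8, 12, 24],
]
query_group = [15, 32, 5, 13, 82, 7, 1]

cot_prompt = build_cot_prompt(demo_groups, query_group)
print(cot_prompt)
```

For the open question, the odd numbers are 15, 5, 13, 7 and 1, which sum to 41, so the answer a model should reach is False.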

Few-Shot Chain of Thought

Research by Sewon Min et al. in 2022 shows that few-shot demonstrations have the following properties:

- the label space and the distribution of the input text specified by the demonstrations are both key (regardless of whether the labels are correct for individual inputs)

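It does not matter much if the answers in the demonstrations are incorrect for individual inputs. What matters is the label space (the set of possible answers) and the distribution of the input text shown in the demonstrations. In the example above, for instance, the labels are split evenly between True and False.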

- the format you use also plays a key role in performance; even if you just use random labels, this is much better than no labels at all.

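Even when the answers in the demonstrations are wrong or random, showing the format and the shape of the reasoning is already enough to improve performance. Note, however, that CoT is reported to take effect only in models with roughly 100B parameters or more.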
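To make the two points above more concrete, here is a small sketch that counts the label distribution of a set of demonstrations and then swaps every label for a random one drawn from the same label space, leaving the inputs and the format untouched. It only mirrors the idea of the random-label setting described above; it is not Min et al.'s code, and the helper names `label_distribution` and `randomize_labels` are made up for this note.

```python
import random
from collections import Counter


def label_distribution(demonstrations):
    """Count how often each label appears among (input, label) pairs."""
    return Counter(label for _, label in demonstrations)


def randomize_labels(demonstrations, label_space, seed=0):
    """Keep inputs and format, but draw each label at random from label_space."""
    rng = random.Random(seed)  # seeded so the shuffle is repeatable
    return [(text, rng.choice(label_space)) for text, _ in demonstrations]


# The four demonstrations from the odd-number prompt, with their original labels.
demos = [
    ("4, 8, 9, 15, 12, 2, 1", "False"),
    ("17, 10, 19, 4, 8, 12, 24", "True"),
    ("16, 11, 14, 4, 8, 13, 24", "True"),
    ("17, 9, 10, 12, 13, 4, 2", "False"),
]

print(label_distribution(demos))  # two "True" and two "False" labels

# Same inputs, same label space, but labels no longer tied to the inputs.
noisy = randomize_labels(demos, ["True", "False"])
print(noisy)
```

Comparing a model's accuracy with `demos` against `noisy` is one way to check how much the correctness of individual labels matters for a given task.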